The prevalence of bad bot traffic surged for the fifth consecutive year in 2023, raising concerns about the state of internet security. According to recent data, bad bots – automated software designed to perform malicious tasks – made up 32% of all internet traffic, an increase of 1.8 percentage points over 2022.

Experts attribute this rise to the growing use of Artificial Intelligence (AI) and Large Language Models (LLMs), which are increasingly being exploited by bad actors to enhance their bots’ capabilities.

While the proportion of good bot traffic, including helpful automation tools, also saw a slight rise, increasing from 17.3% in 2022 to 17.6% in 2023, it wasn’t enough to offset the dominance of malicious bots.

This shift means nearly half of all internet traffic in 2023 – 49.6% – came from bots, leaving human traffic at just 50.4%, a notable drop. The continuing uptick in bad bot activity has sparked calls for improved defenses to protect against this growing threat.
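All of these shares are percentages of total traffic, so they can be checked with a few lines of arithmetic. The Python sketch below assumes the opening paragraph's 1.8% rise is an absolute change in percentage points, which puts the 2022 bad bot share at 30.2%. That 2022 constant is an inference, not a figure quoted in this article. The sketch also shows why the distinction matters: measured relative to the 2022 level, the same rise works out to roughly 6%.

```python
# 2023 shares of all internet traffic, in percent (figures quoted above).
BAD_BOTS_2023 = 32.0
GOOD_BOTS_2023 = 17.6
HUMANS_2023 = 50.4

# Assumption: the "1.8%" rise is in percentage points, so 2022 = 32.0 - 1.8.
BAD_BOTS_2022 = 30.2


def total_bot_share(bad: float, good: float) -> float:
    """All automated traffic is bad bots plus good bots."""
    return round(bad + good, 1)


def point_change(new: float, old: float) -> float:
    """Absolute change, in percentage points."""
    return round(new - old, 1)


def relative_change(new: float, old: float) -> float:
    """Change relative to the old value, in percent."""
    return round((new - old) / old * 100, 1)


bots = total_bot_share(BAD_BOTS_2023, GOOD_BOTS_2023)
print(bots)                                           # 49.6
print(round(bots + HUMANS_2023, 1))                   # 100.0
print(point_change(BAD_BOTS_2023, BAD_BOTS_2022))     # 1.8
print(relative_change(BAD_BOTS_2023, BAD_BOTS_2022))  # 6.0
```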

As the line between good and bad bot traffic continues to blur, the need for more robust security measures is becoming increasingly urgent.

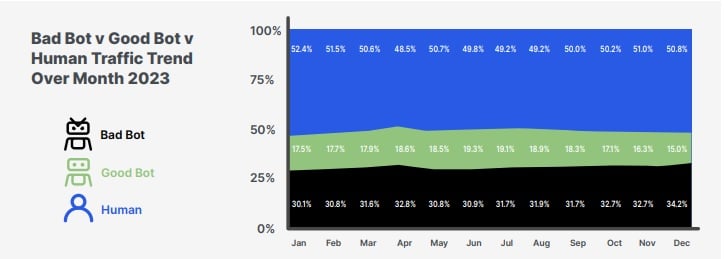

Monthly Bad Bot Traffic Levels

Automated Internet Traffic Surpasses Human Activity During Key Months in 2023

A monthly trend analysis of internet traffic in 2023 reveals a surprising shift: automated traffic outpaced human activity in four separate months of the year. That is a notable milestone, and it underscores the growing dominance of bots, both good and bad, on the internet.

The most significant spike occurred in December, when bad bot traffic reached 34.2%. This surge coincided with a noticeable drop in human internet activity, likely due to the holiday season.

The increase in bad bot traffic during this time is also linked to a higher volume of cyberattacks recorded that month, as bad actors exploited the reduced vigilance of users.

These trends highlight the evolving nature of internet traffic, where non-human actors increasingly dominate, raising concerns about security and the long-term impact on the digital ecosystem.

Bad Bot Traffic Hits Record High, Reversing Early Gains in Human Activity

The composition of internet traffic has shifted dramatically over the past decade, with bad bot activity reaching unprecedented levels in 2023.

According to the data, 32% of all internet traffic in 2023 was generated by bad bots, marking the highest proportion ever recorded and continuing a steady upward trend since 2019.

In 2013, bad bots accounted for just 23.6% of internet traffic, while human activity dominated with 57%. Good bots, which include helpful tools like search engine crawlers, made up 19.4% of traffic at that time.

A significant change occurred in 2014 when good bot traffic surged to 36.32%, driven by more aggressive indexing by search engines.

This helped offset bad bots, which saw a temporary decline. By 2015, bad bot traffic had dropped to its lowest level, coinciding with a rise in human activity to 54.4%, as new internet users from countries like China, India, and Indonesia joined the digital space.

The years 2016 and 2018 also saw relatively low bad bot activity, at 19.9% and 20.4%, respectively. However, from 2019 onward, bad bot traffic began increasing steadily, culminating in the record 32% seen in 2023.

As bad bot traffic continues to climb, concerns grow over the security and integrity of the internet, with human traffic now making up only a slight majority at 50.4%. The increasing sophistication and volume of bad bots pose ongoing challenges for internet users and security professionals.

Shift in Bad Bot Sophistication

The rise of AI technology has brought significant changes to the landscape of bad bot activity, creating a clear division between sophisticated malicious bots and simpler, less advanced versions.

In 2023, simple bad bots saw a notable increase, accounting for 39.6% of all bad bot traffic, up from 33.4% in 2022 and 26.3% five years ago.

This trend suggests that more malicious actors are relying on basic tools, such as AI-generated bot scripts, rather than deploying advanced techniques.

At the same time, moderately sophisticated bad bots have been on a steady decline, dropping from 15.3% of bad bot traffic in 2022 to just 12.4% in 2023. If this trend continues, moderately sophisticated bots may become less prevalent in the future.

Advanced bad bots, however, still dominate the landscape, though their share of total bad bot traffic decreased from 51.2% in 2022 to 48.1% in 2023. Evasive bots, which include both moderate and advanced versions, made up 60.5% of all bad bot traffic in 2023, down from 66.6% the previous year.
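Because the three sophistication tiers partition bad bot traffic, the evasive share is simply the moderate and advanced tiers added together. The short Python check below uses only the percentages quoted above; the dictionary layout and function names are illustrative. The 2023 figures reconcile exactly (12.4 + 48.1 = 60.5). For 2022 the components sum to 66.5, a tenth of a point below the reported 66.6%, and the three tiers total 99.9% (2022) and 100.1% (2023), which most likely reflects rounding in the published figures.

```python
# Share of bad bot traffic by sophistication tier, in percent (quoted above).
SHARES = {
    2022: {"simple": 33.4, "moderate": 15.3, "advanced": 51.2},
    2023: {"simple": 39.6, "moderate": 12.4, "advanced": 48.1},
}


def evasive_share(year: int) -> float:
    """Evasive bots = moderately sophisticated + advanced bots."""
    tiers = SHARES[year]
    return round(tiers["moderate"] + tiers["advanced"], 1)


def tier_total(year: int) -> float:
    """Sum of all tiers; should be 100 apart from rounding."""
    return round(sum(SHARES[year].values()), 1)


for year in sorted(SHARES):
    print(year, evasive_share(year), tier_total(year))
# 2022 66.5 99.9
# 2023 60.5 100.1
```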

Despite the decline in moderate bots and evasive bad bot traffic, the sophistication of malicious bots remains a pressing concern, with constant advancements and the introduction of new evasion techniques.

Mobile User Agents Now Make Up Nearly Half of Bad Bot Traffic

Bad bots are increasingly disguising themselves as mobile user agents, accounting for 44.8% of all bad bot traffic in 2023, up sharply from 28.1% in 2020.

This surge reflects a growing trend of bad bots mimicking human-like behaviors, especially as mobile devices now generate over 55% of global internet traffic as of early 2024.

There are two key factors driving this shift. First, with more users accessing the internet via smartphones, bad bots are adopting mobile user agents to blend in with legitimate traffic patterns.

By posing as mobile users, these bots can evade detection and mirror typical browsing activity, making them harder to identify.

The second reason for this increase lies in privacy features embedded in certain mobile browsers, particularly Mobile Safari.

These browsers often provide enhanced privacy controls that limit the information they send to websites, making it more difficult to track and identify the bots behind the traffic. This reduced data visibility makes it easier for bad bots to conceal their true identities.

Meanwhile, bad bots posing as desktop browsers, such as Chrome, Firefox, Safari, or Edge, declined from 68% of bad bot traffic in 2020 to 54% in 2023. A small portion of bad bot traffic, 1.2%, comes from other user agents, including devices like PlayStation, Nintendo, and Smart TVs.
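The breakdown above buckets bad bots by the User-Agent they present. That HTTP header is a free-text value chosen by the client, so any classification based on it records what a request claims to be, which is exactly why a mobile disguise works. The Python sketch below is a hypothetical, deliberately crude regex bucketing, not the methodology behind the reported percentages; the patterns, function names, and sample header strings are all illustrative assumptions.

```python
import re
from collections import Counter

# Hypothetical, deliberately crude patterns for illustration only.
MOBILE_PATTERN = re.compile(r"iPhone|Android.*Mobile")
DESKTOP_PATTERN = re.compile(r"Windows NT|Macintosh|X11; Linux")


def classify_user_agent(user_agent: str) -> str:
    """Bucket a User-Agent header value as 'mobile', 'desktop' or 'other'.

    The header is set by the client, so this records what a request
    claims to be, not what actually sent it.
    """
    if MOBILE_PATTERN.search(user_agent):
        return "mobile"
    if DESKTOP_PATTERN.search(user_agent):
        return "desktop"
    return "other"


def share_by_bucket(user_agents: list[str]) -> dict[str, float]:
    """Percentage of requests falling into each bucket."""
    if not user_agents:
        return {}
    counts = Counter(classify_user_agent(ua) for ua in user_agents)
    return {bucket: round(100 * n / len(user_agents), 1) for bucket, n in counts.items()}


# Illustrative headers; a bot script can simply copy an iPhone Safari string.
spoofed_iphone = (
    "Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Mobile/15E148 Safari/604.1"
)
desktop_chrome = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)
console = "Mozilla/5.0 (PlayStation; PlayStation 5/2.26) AppleWebKit/605.1.15"

print(classify_user_agent(spoofed_iphone))  # mobile
print(share_by_bucket([spoofed_iphone, spoofed_iphone, desktop_chrome, console]))
# {'mobile': 50.0, 'desktop': 25.0, 'other': 25.0}
```

Nothing in the header distinguishes the spoofed iPhone request from a real one, which is why detection has to lean on other signals.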

As bad bots evolve, security teams must adapt to counter the growing threat posed by mobile-based bot traffic, which has become a favored disguise for malicious actors.

Bad Bots in the Age of Artificial Intelligence: A Growing Challenge

The rise of artificial intelligence (AI) and Large Language Models (LLMs) is transforming industries and daily life, offering new efficiencies and conveniences. However, this technological evolution has also introduced new challenges, particularly in the realm of internet traffic and data ethics.

As AI and LLMs become more prevalent, they bring with them an increasing volume of automated traffic, particularly from bad bots.

These advanced technologies have not only changed how businesses operate but have also reshaped the internet’s traffic profile. Bad bots, both simple and sophisticated, now account for roughly a third of all online activity (32% in 2023).

While the distinction between basic and advanced bots continues to evolve, a more complex issue has reemerged: the legality of web scraping.

Web scraping, the automated extraction of data from websites, has long been a contentious topic. However, the rapid rise of AI and LLMs has reignited the debate.

These models rely heavily on large datasets for training, much of which is obtained through scraping. While web scraping fuels AI development, it raises substantial legal and ethical questions about data use, ownership, and privacy.
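At its simplest, scraping means downloading a page’s HTML and pulling structured pieces out of it. The Python sketch below uses only the standard library’s `html.parser` and assumes a hypothetical page whose headlines sit in `<h2>` elements; it parses an inline string instead of fetching a live site. Large-scale collection for model training applies the same idea across many pages, typically with a download step (for example `urllib.request.urlopen`) in front of the parser.

```python
from html.parser import HTMLParser


class HeadlineScraper(HTMLParser):
    """Collects the text of every <h2> element (hypothetical page layout)."""

    def __init__(self) -> None:
        super().__init__()
        self._in_h2 = False
        self._buffer: list[str] = []
        self.headlines: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_h2:
            self.headlines.append("".join(self._buffer).strip())
            self._in_h2 = False

    def handle_data(self, data):
        # Keep text only while inside an <h2>, including nested tags like <em>.
        if self._in_h2:
            self._buffer.append(data)


def scrape_headlines(html: str) -> list[str]:
    parser = HeadlineScraper()
    parser.feed(html)
    parser.close()
    return parser.headlines


page = """
<html><body>
  <h2>First story</h2><p>Body text</p>
  <h2>Second <em>story</em></h2>
</body></html>
"""
print(scrape_headlines(page))  # ['First story', 'Second story']
```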

The legality of web scraping varies depending on the jurisdiction and the circumstances under which it occurs. Proponents argue that scraping is essential for advancing AI technologies, providing the massive data sets required for model training.

Critics, however, contend that this practice infringes on intellectual property rights, with organizations claiming their proprietary content is being scraped without permission.

At the heart of the debate is the tension between innovation and privacy. On one side, AI developers emphasise the need for open access to data to push technological boundaries.

On the other, businesses and content creators argue that scraping violates copyright laws and personal privacy. As AI continues to evolve, the laws governing web scraping—many of which are outdated and inconsistent across countries—are struggling to keep pace.

This renewed debate highlights the need for updated regulations that balance the need for innovation with the protection of proprietary content.

As AI and LLMs continue to advance, finding a legal and ethical framework that fosters both technological growth and data privacy will be crucial.

A Ground-Breaking Legal Case

In a pivotal legal case, The New York Times filed a lawsuit against OpenAI and Microsoft, accusing them of copyright infringement by using web scraping to train AI models.

The lawsuit underscores a growing conflict between AI development and intellectual property rights. At the core of the dispute is the question of whether scraping copyrighted content to train AI models falls under the “fair use” doctrine of the US Copyright Act.

OpenAI argues that its use of The Times’ content is protected by fair use, a legal principle that allows limited use of copyrighted material for purposes like commentary or education.

However, The New York Times argues that OpenAI’s use is not “transformative”, because the AI models do not create something sufficiently new from the original content. Transformativeness is not a strict requirement, but it weighs heavily in the first of fair use’s four statutory factors, the purpose and character of the use.

The outcome of this lawsuit has far-reaching implications. It could redefine the boundaries of copyright laws in the age of AI, setting a critical precedent for the legality of using copyrighted material to train AI models.

As more organisations rely on massive datasets to advance AI technologies, the case highlights the tension between innovation and content creators’ rights.

This legal battle also points to a broader issue—the urgent need for updated copyright laws that better balance the rights of content creators with the demands of AI development.

Australia Continues to Be a Prime Target for Bad Bots, Accounting for 8.4% of All Attacks Globally

Australia has emerged as a significant target for malicious bots, accounting for 8.4% of all global bot attacks, trailing only the United States and the Netherlands.

Recent reports indicate that malicious bot traffic in Australia surged to 30.2% in 2023, raising concerns among cybersecurity experts.

As of 2024, bots—both beneficial and harmful—now make up 36.4% of the nation’s total internet traffic. This increase underscores the growing challenge for Australian businesses and individuals alike, as cyber threats continue to evolve.

Experts warn that the rise in bot activity could impact online security, e-commerce, and data integrity, urging organisations to bolster their defenses against these persistent threats.