Microsoft has unveiled a new cloud-controlled hybrid infrastructure platform called Azure Local, designed for distributed locations.

The launch is part of a broader set of updates announced at the company’s Microsoft Ignite 2024 event, where it also introduced several new technologies, including its Azure Integrated Hardware Security Module (HSM) and the Azure Boost Data Processing Unit (DPU) chips.

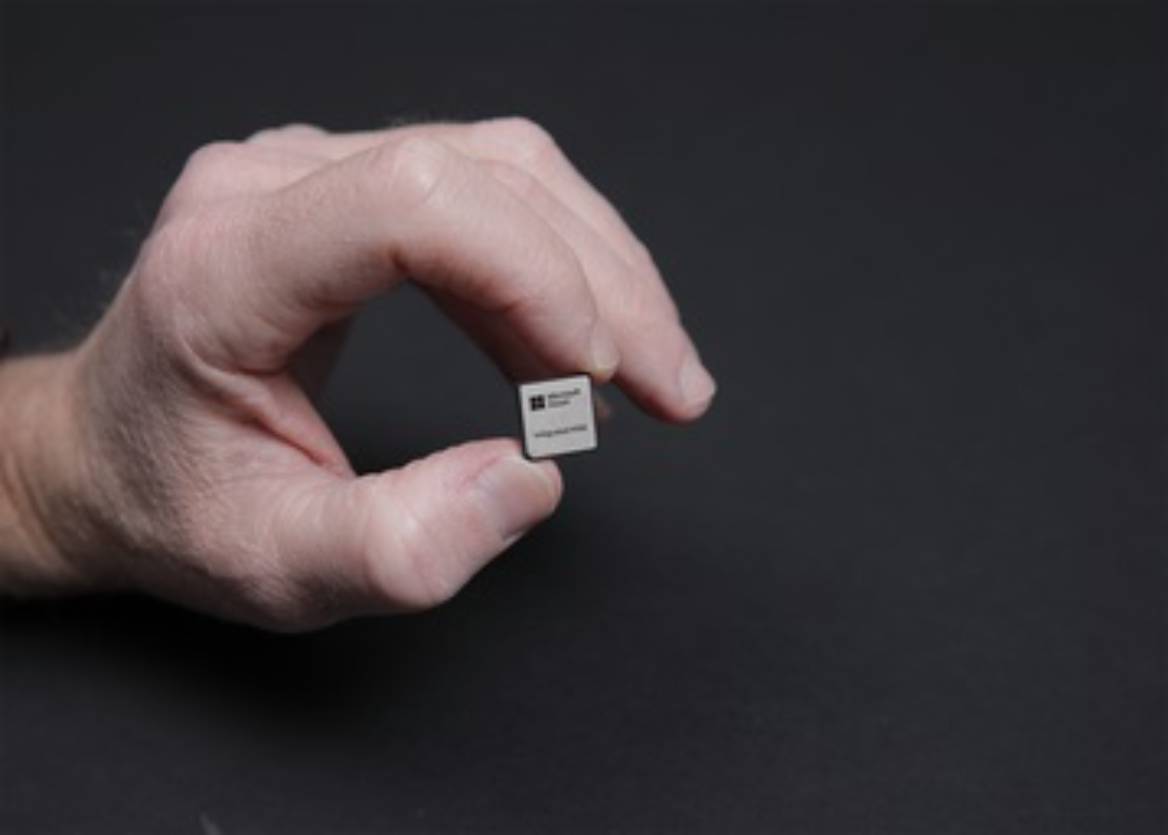

The Azure Integrated HSM provides protection globally across Azure’s data center hardware.

The Azure Integrated HSM is a cloud security chip that provides customers full administrative control and cryptographic management, ensuring that Microsoft itself cannot access or view the keys stored within.

Customers can manage access to the HSM devices within their organisation, defining roles and permissions based on need. Each HSM device is validated to high security standards, including FIPS 140-2 Level 3 and eIDAS Common Criteria EAL4+.

The HSM solution offers global protection across Azure’s data center hardware, complementing Microsoft’s existing Azure Dedicated HSM service, which hosts Thales Luna 7 HSM network appliances in Azure data centers.

On the hardware front, Microsoft introduced the Azure Boost DPU, its first in-house-designed DPU.

Tailored for data-centric workloads, the DPU is built for high efficiency and low power consumption, joining the company’s existing lineup of CPUs and GPUs in its “processor trifecta.”

Microsoft claims servers equipped with the new DPU will deliver up to four times the performance of current servers on storage workloads while drawing about one-third of the power.

The company’s work with DPUs dates to January 2023, when it acquired DPU provider Fungible with plans to integrate Fungible’s team into Microsoft’s data center infrastructure efforts.

By July 2023, Microsoft had announced Azure Boost, a DPU-like service designed to offload key virtualisation tasks, including networking, storage, and host management, onto dedicated hardware.

Additionally, Microsoft revealed that it’s continuing to innovate in cooling technologies to meet the growing demands of AI.

The company is now using a liquid cooling heat exchanger unit to cool large-scale AI systems hosted on Azure, aiming to improve cooling efficiency while allowing the units to be retrofitted into its existing data centers.