Inspired by the growing importance of artificial intelligence (AI) in society, researchers from the University of Tokyo examined public attitudes towards the ethics of artificial intelligence.

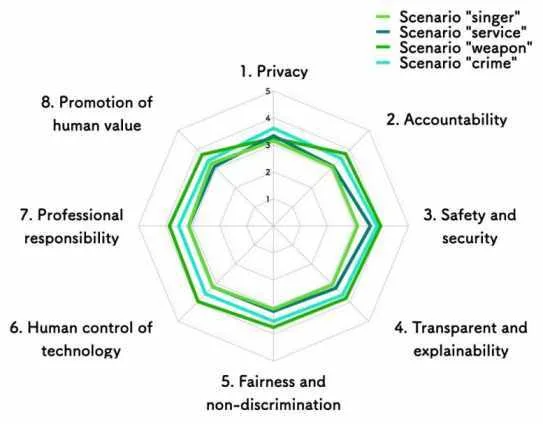

Their findings quantify how different demographic and ethical scenarios influence these attitudes. As part of this research, the team developed an octagonal visual metric analogous to a rating system that could be useful for AI researchers who want to know how their work might be perceived by the public.

Many people feel that the rapid development of technology often outstrips the social structures that implicitly guide and regulate it, such as law or ethics. AI in particular exemplifies this as it has become so pervasive in the everyday lives of many, seemingly overnight.

This proliferation, coupled with the relative complexity of AI compared to more familiar technology, can breed fear and distrust of this key component of modern life.

Researchers at the University of Tokyo, led by Professor Hiromi Yokoyama of the Kavli Institute for the Physics and Mathematics of the Universe, have begun to quantify public attitudes towards ethical issues surrounding AI. Through analysis of survey data, the team sought to answer two questions in particular: how attitudes change depending on the scenario presented to a respondent, and how the respondent's own demographics affect those attitudes.

Ethics cannot really be quantified, so to measure attitudes towards AI ethics, the team employed eight themes common to many AI applications that raise ethical questions: privacy, accountability, safety and security, transparency and explainability, fairness and non-discrimination, human control of technology, professional responsibility, and the promotion of human values.

The group called these themes “octagonal dimensions.” They were inspired by a 2020 article by Harvard University researcher Jessica Fjeld and her team.

Survey respondents were given a set of four scenarios to judge against these eight criteria. Each scenario looked at a different application of AI. These were AI-generated art, customer service AI, autonomous weapons, and crime prediction.
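To make the idea of an eight-sided profile concrete, here is a minimal sketch of how ratings on the eight themes might be averaged into one octagon per scenario. It is not the team's actual method: the function name `octagon_profile`, the numeric rating scale, and the example answers are all assumptions made up for illustration, and none of the numbers are survey results.

```python
from statistics import mean

# The eight themes named in the article.
THEMES = [
    "privacy",
    "accountability",
    "safety and security",
    "transparency and explainability",
    "fairness and non-discrimination",
    "human control of technology",
    "professional responsibility",
    "promotion of human values",
]


def octagon_profile(ratings):
    """Average respondents' ratings per theme for a single scenario.

    `ratings` is a list of dicts, one per respondent, mapping each
    theme name to a numeric score (the scale is an assumption here).
    """
    return {theme: mean(r[theme] for r in ratings) for theme in THEMES}


# Made-up placeholder answers from two hypothetical respondents
# for one scenario. These are NOT data from the survey.
example_ratings = [
    {theme: 3 for theme in THEMES},
    {theme: 5 for theme in THEMES},
]

profile = octagon_profile(example_ratings)
for theme, score in profile.items():
    print(f"{theme}: {score}")
```

Computing one such profile for each of the four scenarios would give four octagons that could be compared side by side, which is the kind of comparison a rating-style visual is meant to support.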

Survey respondents also provided researchers with information about themselves, such as age, gender, occupation, and level of education, and answered an additional set of questions that measured their interest in science and technology. This information was essential for researchers to see which characteristics correspond to which attitudes.

“Prior studies have shown that risk is perceived more negatively by women, older people, and those with more subject knowledge. I was expecting to see something different in this survey given how commonplace AI has become, but surprisingly we saw similar trends here,” said Yokoyama. “Something we saw that was expected, however, was how the different scenarios were perceived, with the idea of AI weapons being met with far more skepticism than the other three scenarios.”

The team hopes the results could lead to the creation of some kind of universal scale to measure and compare ethical issues related to AI. This survey was limited to Japan, but the team has already started collecting data from several other countries.

“With a universal scale, researchers, developers and regulators could better measure the acceptance of specific AI applications or impacts and act accordingly,” said Assistant Professor Tilman Hartwig. “One thing I discovered while developing the scenarios and questionnaire is that many topics within AI require significant explanation, more so than we realized. This goes to show there is a huge gap between perception and reality when it comes to AI.”